48 problems from 37 repositories selected within the current time window.

You can adjust the time window by modifying the problems' release start and end dates. Evaluations highlighted in red may be contaminated, meaning they include tasks that were created before the model's release date. Evaluations highlighted in orange are external systems shown for reference. If the selected time window is wider than the range of task dates a model was evaluated on, the model appears at the bottom of the leaderboard with N/A values.
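The highlighting rules above can be sketched as a small function. This is only an illustration of the described logic, not the leaderboard's implementation: the function name `evaluation_status`, its parameters, and the returned labels are hypothetical, and the N/A rule is interpreted here as "the window extends beyond the dates the model was evaluated on".

```python
from datetime import date


def evaluation_status(
    model_release: date,
    evaluated_task_dates: list[date],
    window_start: date,
    window_end: date,
) -> str:
    """Classify one model's evaluation for a selected time window.

    Assumption: a model is N/A when the window reaches outside the range of
    task dates it was evaluated on, and potentially contaminated when any
    selected task was created before the model's release date.
    """
    if not evaluated_task_dates:
        return "n/a"
    if window_start < min(evaluated_task_dates) or window_end > max(evaluated_task_dates):
        return "n/a"
    selected = [d for d in evaluated_task_dates if window_start <= d <= window_end]
    if any(d < model_release for d in selected):
        return "potentially contaminated"
    return "ok"


# Hypothetical task dates and release date, for illustration only.
tasks = [date(2025, 12, 1), date(2025, 12, 15), date(2025, 12, 31)]
release = date(2025, 12, 10)
print(evaluation_status(release, tasks, date(2025, 12, 1), date(2025, 12, 31)))
print(evaluation_status(release, tasks, date(2025, 12, 12), date(2025, 12, 31)))
print(evaluation_status(release, tasks, date(2025, 11, 1), date(2025, 12, 31)))
```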

Insights

December 2025

- In our evaluations, Gemini 3 Flash Preview slightly outperformed Gemini 3 Pro Preview on pass@1 (57.6% vs 56.5%), despite being the smaller and cheaper model. This mirrors Google’s own SWE-bench Verified results and its description of Flash as a model designed for coding and agentic workflows that emphasize rapid, iterative development and fast feedback loops.

- GLM-4.7 stands out as the strongest open-source model on our leaderboard, ranking alongside closed models like GPT-5.1-codex.

- GPT-OSS-120B saw a large jump in performance when run in high-effort reasoning mode, nearly doubling its resolved rate compared to its standard configuration, highlighting how much inference-time scaling can matter.

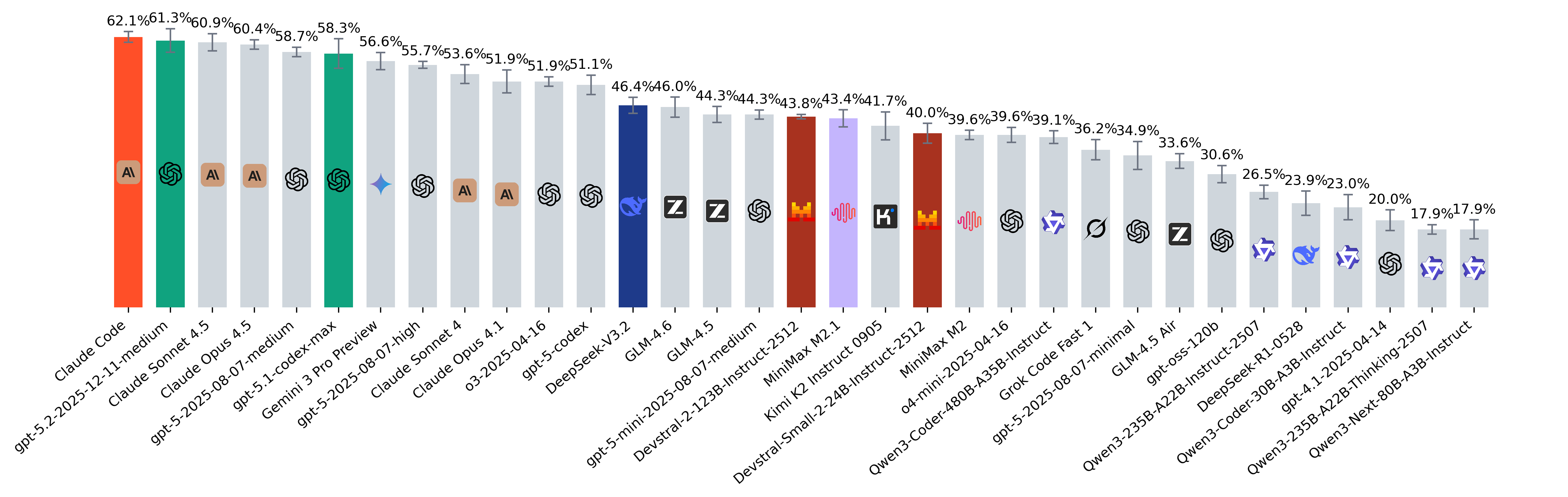

- We hypothesize that Claude Code's ranking relative to Claude Opus 4.5 may be influenced by its reliance on Claude Haiku 4.5 for executing specific agent actions. It is possible that offloading frequent tasks to the smaller model limits overall performance, even with Opus providing high-level guidance.

November 2025

- For Claude Code, we follow the default recommendation of running the agent in headless mode and using Opus 4.5 as the primary model: `--model=opus --allowedTools="Bash,Read" --permission-mode acceptEdits --output-format stream-json --verbose`. This resulted in a mixed execution pattern where Opus 4.5 handles core reasoning and Haiku 4.5 is delegated auxiliary tasks. Across trajectories, ~30% of steps originate from Haiku, with the remainder from Opus 4.5. We use version 2.0.62 of Claude Code. In rare instances (1–2 out of 47 tasks), Claude Code attempts to use prohibited tools like WebFetch or to request user approval, resulting in timeouts and task failure.

- GPT-5.2 reaches top-tier performance, matching Claude 4.5 Sonnet / Opus on agentic tasks while using significantly fewer tokens per problem, making it one of the most balanced models this month in terms of capability versus efficiency.

- Gemini 3 Pro shows a major leap in agentic performance compared to Gemini 2.5 Pro and firmly joins the group of leading models.

- DeepSeek v3.2 achieves SOTA among open-weight models. At the same time, it uses the highest number of tokens per problem across all evaluated models, suggesting a very expansive reasoning style.

- The Devstral 2 123B model falls into the same performance range as other mid-tier systems, while the 24B variant remains relatively close despite the large size gap. Devstral 2 models are evaluated via self-hosted vLLM. Cost per Problem is omitted due to the lack of publicly available reference pricing.

- All OpenAI models are now evaluated via the Responses API, with reasoning items kept in context.

- As in previous updates, we also track least frequently solved problems. This month, the hardest tasks are tobymao/sqlglot-6374 and sympy/sympy-28660.

October 2025

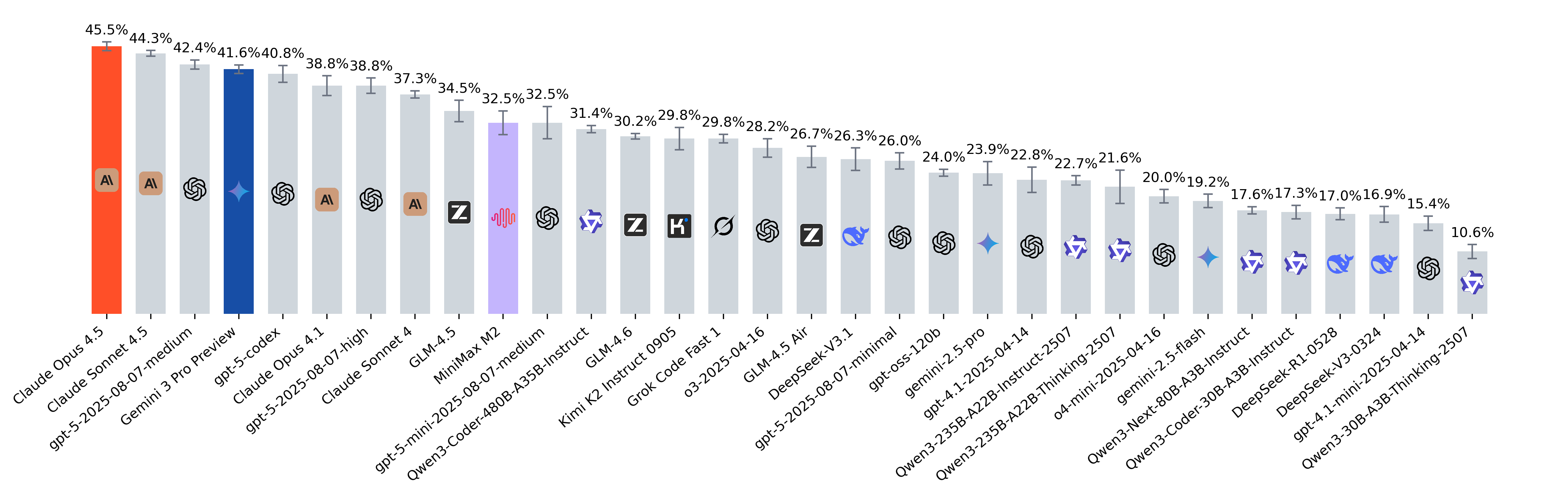

- [Update] While the cumulative Pass@5 across all models stands at 72.5%, the combination of Opus 4.5, GPT-5 Codex, and Gemini 3 Pro reached 58.8%. Notably, marimo-team/marimo-6629, getmoto/moto-9350 and piskvorky/smart_open-892 were the least frequently solved problems.

- [Update] We re-ran the MiniMax M2 model through the official API platform with token caching enabled, which is not supported on OpenRouter for now. We also added a new column, Cached Tokens, which shows the average percentage of cached tokens in the token usage.

- [Update] Claude Opus 4.5 has claimed the #1 spot on the leaderboard. Remarkably for a flagship model, it is only slightly more expensive per problem than Sonnet 4.5 ($1.15 vs $0.98).

- Claude Sonnet 4.5 delivers the strongest pass@1 and pass@5 results, and it uses more tokens per problem than gpt-5-medium and gpt-5-high despite not running in reasoning mode. This indicates that Sonnet 4.5 adapts its reasoning depth internally and uses its capacity efficiently.

- GPT-5 variants differ in how often they invoke reasoning: gpt-5-medium uses it in ~58% of steps (avg. 714 tokens), while gpt-5-high increases this to ~62% (avg. 1053 tokens). However, this additional reasoning does not translate into better task-solving ability in our setup: gpt-5-medium achieves a pass@5 of 49.0%, compared to 47.1% for gpt-5-high.

- MiniMax M2 is the most cost-efficient open-source model among the top performers. Its pricing is $0.255 / $1.02 per 1M input/output tokens, whereas gpt-5-codex costs $1.25 / $10.00 – with cached input available at just $0.125. In agentic workflows, where large trajectory prefixes are reused, this cache advantage can make models with cheap cache reads more beneficial even if their raw input/output prices are higher. As a result, gpt-5-codex has approximately the same Cost per Problem as MiniMax M2 ($0.51 vs $0.44) while being much more powerful.

- GLM-4.6 reaches the agent’s maximum step limit (80 steps in our setup) roughly twice as often as GLM-4.5. This suggests its performance may be constrained by the step budget, and increasing the limit could potentially improve its resolved rate.
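The cache effect described above for gpt-5-codex and MiniMax M2 can be made concrete with a rough cost calculation. The prices are the ones quoted in this section; the token counts and the 90% cache-hit share are hypothetical values chosen for illustration, and the MiniMax M2 call assumes no cache discount. The function `cost_per_problem` is a sketch, not the formula used to produce the Cost per Problem column.

```python
from typing import Optional


def cost_per_problem(
    input_tokens: int,
    output_tokens: int,
    cached_fraction: float,
    price_in: float,
    price_out: float,
    price_cached: Optional[float] = None,
) -> float:
    """Estimate the USD cost of one trajectory; prices are per 1M tokens.

    cached_fraction is the share of input tokens served from cache.
    When price_cached is None, cached tokens are billed at the full input price.
    """
    if price_cached is None:
        price_cached = price_in
    cached = input_tokens * cached_fraction
    fresh = input_tokens - cached
    total = fresh * price_in + cached * price_cached + output_tokens * price_out
    return total / 1_000_000


# Hypothetical trajectory: 1.5M input tokens (90% cached), 20k output tokens.
codex = cost_per_problem(1_500_000, 20_000, 0.9, 1.25, 10.00, price_cached=0.125)
minimax = cost_per_problem(1_500_000, 20_000, 0.9, 0.255, 1.02)
print(f"gpt-5-codex: ${codex:.2f}, MiniMax M2 (no cache discount): ${minimax:.2f}")
```

Under these assumed numbers the two costs land in the same range even though the list prices differ by roughly 5x to 10x, which is the point the bullet above makes about cheap cache reads.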

September 2025

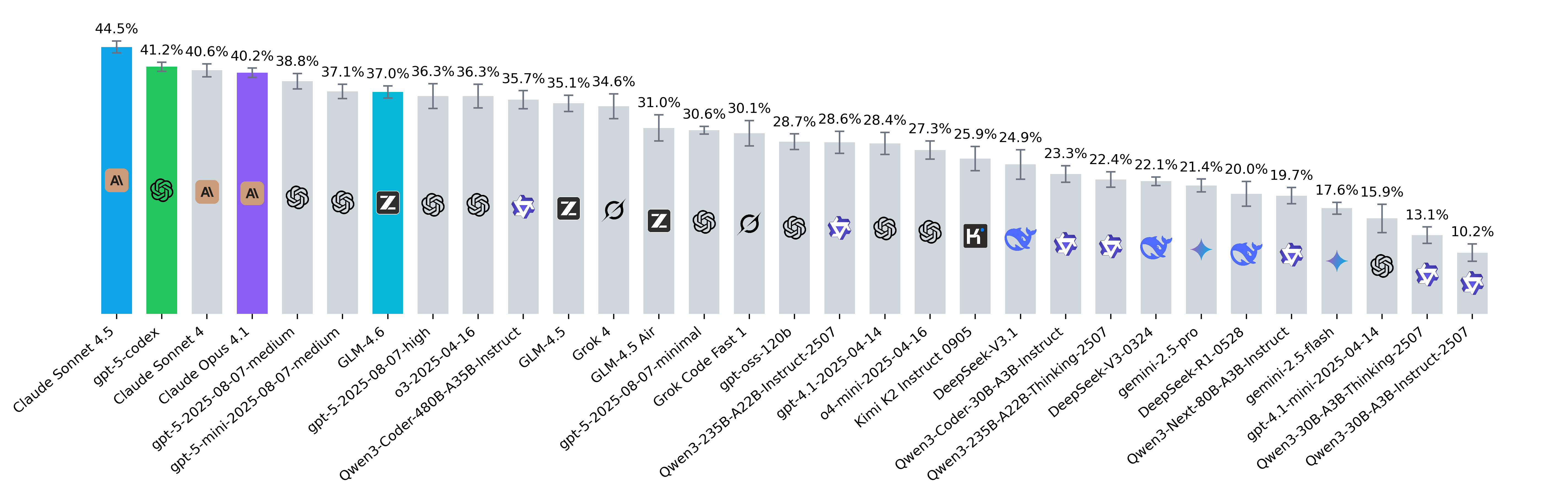

- Claude Sonnet 4.5 demonstrated notably strong generalization and problem coverage, achieving the highest pass@5 (55.1%) and uniquely solving several instances that no other model on the leaderboard managed to resolve: python-trio/trio-3334, cubed-dev/cubed-799, canopen-python/canopen-613.

- Grok Code Fast 1 and gpt-oss-120b stand out as ultra-efficient budget options, delivering around 29%–30% resolved rate for only $0.03–$0.04 per problem.

- We observed that Anthropic models (e.g., Claude Sonnet 4) do not use caching by default, unlike other frontier models. Proper use of caching dramatically reduces inference costs – for instance, the average per-problem cost for Claude Sonnet 4 dropped from $5.29 in our August release to just $0.91 in September. All Anthropic models in the current September release were evaluated with caching enabled, ensuring cost figures are now directly comparable to other frontier models.

- All models on the leaderboard were evaluated using the ChatCompletions API, except for gpt-5-codex and gpt-oss-120b, which are only accessible via the Responses API. The Responses API natively supports reasoning models and allows linking to previous responses through unique references. This mechanism leverages the model's internal reasoning context from earlier steps – a feature that turned out to be beneficial for agentic systems that require multi-step reasoning continuity.

- We also evaluated gpt-5-medium with reasoning context reuse enabled via the Responses API, where it achieved a resolved rate of 41.2% and pass@5 of 51%. However, to maintain fairness, we excluded these results from the leaderboard since other reasoning-capable models currently do not have reasoning-context reuse enabled within our evaluation framework. We're interested in evaluating all frontier models with reasoning context preserved from earlier steps to see how their performance changes.

- In our evaluation, we observed that gpt-5-high performed worse than gpt-5-medium. We initially attributed this to the agent's maximum step limit, theorizing that gpt-5-high requires more steps to run tests and check corner cases. However, doubling the max_step_limit from its default of 80 to 160 yielded only a slight performance increase (pass@1: 36.3% -> 38.3%, pass@5: 46.9% -> 48.9%). An alternative hypothesis, which we will validate shortly, is that gpt-5-high benefits especially from using its previous reasoning steps.
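The linking of responses mentioned above can be sketched as a loop that passes each response's identifier to the next call. This is a minimal illustration, not the evaluation harness used for the leaderboard: the function `run_agent_steps` and its arguments are hypothetical, and `client` is assumed to be an `openai.OpenAI()` instance, whose `responses.create` method accepts a `previous_response_id` parameter.

```python
from typing import Any, Optional


def run_agent_steps(client: Any, model: str, observations: list[str]) -> list[str]:
    """Send observations one at a time, linking each call to the previous response.

    Passing previous_response_id lets the API reuse the earlier context,
    including the model's reasoning items, instead of resending it.
    """
    previous_id: Optional[str] = None
    outputs: list[str] = []
    for observation in observations:
        kwargs: dict[str, Any] = {"model": model, "input": observation}
        if previous_id is not None:
            kwargs["previous_response_id"] = previous_id
        response = client.responses.create(**kwargs)
        previous_id = response.id  # reference used to chain the next step
        outputs.append(response.output_text)
    return outputs
```

With the official SDK this would be called as `run_agent_steps(OpenAI(), "gpt-5", ["first observation", "second observation"])`; the model name and inputs here are placeholders.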

August 2025

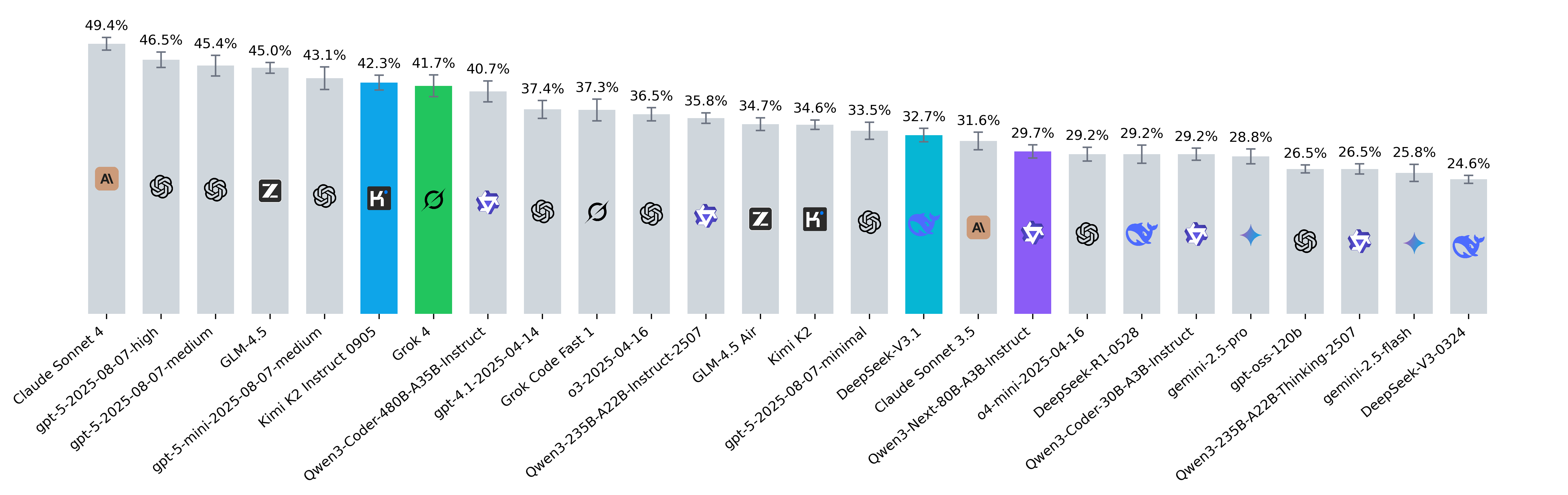

- Kimi-K2 0905 has improved significantly (resolved rate up from 34.6% to 42.3%) and is now among the top 3 open-source models.

- DeepSeek V3.1 also improved, though less dramatically. At the same time, the number of tokens produced has grown almost 4x.

- Qwen3-Next-80B-A3B-Instruct, despite not being trained directly for coding, performs on par with the 30B-Coder. To reflect model speed, we’re also thinking about how best to report efficiency metrics such as tokens/sec on the leaderboard.

- Finally, Grok 4: the frontier model from xAI has now entered the leaderboard and is among the top performers.

| Rank | Model | Resolved Rate (%) | Resolved Rate SEM (±) | Pass@5 (%) | Cost per Problem ($) | Tokens per Problem | Cached Tokens (%) |

|---|---|---|---|---|---|---|---|

| 1 | Claude Opus 4.5 | 63.3% | 1.41% | 79.2% | $1.22 | 1,449,298 | 95.2% |

| 2 | gpt-5.2-2025-12-11-xhigh | 61.5% | 1.21% | 70.8% | $1.46 | 1,823,287 | 67.2% |

| 3 | Gemini 3 Flash Preview | 60.0% | 2.59% | 72.9% | $0.29 | 1,926,624 | 74.5% |

| 4 | gpt-5.2-2025-12-11-medium | 59.4% | 0.81% | 70.8% | $0.86 | 913,358 | 56.1% |

| 5 | Gemini 3 Pro Preview | 58.9% | 2.18% | 68.8% | $0.60 | 1,152,978 | 81.3% |

| 6 | Claude Sonnet 4.5 | 57.5% | 0.83% | 75.0% | $0.98 | 1,988,411 | 96.4% |

| 7 | gpt-5.1-codex-max | 56.7% | 1.79% | 70.8% | $0.63 | 1,403,090 | 75.1% |

| 8 | Claude Code | 56.7% | 1.67% | 70.8% | $1.25 | 1,741,403 | 91.7% |

| 9 | gpt-5.1-codex | 54.6% | 1.79% | 64.6% | $0.62 | 1,577,354 | 79.5% |

| 10 | GLM-4.7 | 51.3% | 1.40% | 66.7% | $0.40 | 1,865,305 | 79.8% |

| 11 | DeepSeek-V3.2 | 48.5% | 1.19% | 68.8% | $0.25 | 2,061,251 | 63.6% |

| 12 | gpt-5-mini-2025-08-07-high | 44.3% | 1.50% | 60.4% | $0.71 | 1,572,962 | 61.8% |

| 13 | gpt-5-mini-2025-08-07-medium | 40.7% | 1.46% | 52.1% | $0.32 | 1,086,010 | 55.0% |

| 14 | Kimi K2 Thinking | 40.5% | 1.20% | 60.4% | $0.48 | 2,062,604 | 90.3% |

| 15 | GLM-4.6 | 40.1% | 2.82% | 64.6% | $0.30 | 1,849,712 | 89.6% |

| 16 | Kimi K2 Instruct 0905 | 38.4% | 1.42% | 60.4% | $0.31 | 1,761,770 | 95.2% |

| 17 | Qwen3-Coder-480B-A35B-Instruct | 38.3% | 2.92% | 62.5% | $0.28 | 1,399,124 | 95.3% |

| 18 | MiniMax M2.1 | 37.3% | 2.59% | 58.3% | $0.10 | 1,539,664 | 89.8% |

| 19 | gpt-oss-120b-high | 37.0% | 3.53% | 62.5% | $0.26 | 1,673,241 | 91.4% |

| 20 | Devstral-2-123B-Instruct-2512 | 36.6% | 1.41% | 59.6% | $0.09 | 1,724,981 | 94.2% |

| 21 | Grok Code Fast 1 | 35.9% | 1.22% | 54.2% | $0.08 | 1,108,107 | 73.9% |

| 22 | GLM-4.5 Air | 34.1% | 2.50% | 50.0% | $0.12 | 1,684,231 | 79.0% |

| 23 | Devstral-Small-2-24B-Instruct-2512 | 34.1% | 1.13% | 54.2% | $0.12 | 1,969,493 | 94.8% |

| 24 | DeepSeek-R1-0528 | 26.0% | 2.17% | 46.8% | $0.44 | 468,360 | 0.0% |

| 25 | Qwen3-235B-A22B-Instruct-2507 | 24.6% | 1.54% | 39.6% | $0.17 | 860,136 | 0.0% |

| 26 | Qwen3-Coder-30B-A3B-Instruct | 23.0% | 1.75% | 37.5% | $0.07 | 674,904 | 0.0% |

| 27 | gpt-oss-120b | 22.7% | 2.25% | 39.6% | $0.14 | 875,894 | 89.4% |

| 28 | Qwen3-Next-80B-A3B-Instruct | 19.9% | 2.69% | 37.5% | $0.33 | 643,605 | 0.0% |

| 29 | Qwen3-30B-A3B-Instruct-2507 | 11.5% | 1.38% | 25.0% | $0.13 | 1,239,588 | 0.0% |

| 30 | Claude Opus 4.1 | N/A | N/A | N/A | N/A | N/A | N/A |

| 31 | Claude Sonnet 3.5 | N/A | N/A | N/A | N/A | N/A | N/A |

| 32 | Claude Sonnet 4 | N/A | N/A | N/A | N/A | N/A | N/A |

| 33 | DeepSeek-V3 | N/A | N/A | N/A | N/A | N/A | N/A |

| 34 | DeepSeek-V3-0324 | N/A | N/A | N/A | N/A | N/A | N/A |

| 35 | DeepSeek-V3-0324 | N/A | N/A | N/A | N/A | N/A | N/A |

| 36 | DeepSeek-V3.1 | N/A | N/A | N/A | N/A | N/A | N/A |

| 37 | Devstral-Small-2505 | N/A | N/A | N/A | N/A | N/A | N/A |

| 38 | gemini-2.0-flash | N/A | N/A | N/A | N/A | N/A | N/A |

| 39 | gemini-2.0-flash | N/A | N/A | N/A | N/A | N/A | N/A |

| 40 | gemini-2.5-flash | N/A | N/A | N/A | N/A | N/A | N/A |

| 41 | gemini-2.5-flash-preview-05-20 no-thinking | N/A | N/A | N/A | N/A | N/A | N/A |

| 42 | gemini-2.5-flash-preview-05-20 no-thinking | N/A | N/A | N/A | N/A | N/A | N/A |

| 43 | gemini-2.5-pro | N/A | N/A | N/A | N/A | N/A | N/A |

| 44 | gemma-3-27b-it | N/A | N/A | N/A | N/A | N/A | N/A |

| 45 | GLM-4.5 | N/A | N/A | N/A | N/A | N/A | N/A |

| 46 | gpt-4.1-2025-04-14 | N/A | N/A | N/A | N/A | N/A | N/A |

| 47 | gpt-4.1-2025-04-14 | N/A | N/A | N/A | N/A | N/A | N/A |

| 48 | gpt-4.1-mini-2025-04-14 | N/A | N/A | N/A | N/A | N/A | N/A |

| 49 | gpt-4.1-mini-2025-04-14 | N/A | N/A | N/A | N/A | N/A | N/A |

| 50 | gpt-4.1-nano-2025-04-14 | N/A | N/A | N/A | N/A | N/A | N/A |

| 51 | gpt-5-2025-08-07-high | N/A | N/A | N/A | N/A | N/A | N/A |

| 52 | gpt-5-2025-08-07-medium | N/A | N/A | N/A | N/A | N/A | N/A |

| 53 | gpt-5-2025-08-07-minimal | N/A | N/A | N/A | N/A | N/A | N/A |

| 54 | gpt-5-codex | N/A | N/A | N/A | N/A | N/A | N/A |

| 55 | gpt-oss-20b | N/A | N/A | N/A | N/A | N/A | N/A |

| 56 | Grok 4 | N/A | N/A | N/A | N/A | N/A | N/A |

| 57 | horizon-alpha | N/A | N/A | N/A | N/A | N/A | N/A |

| 58 | horizon-beta | N/A | N/A | N/A | N/A | N/A | N/A |

| 59 | Kimi K2 | N/A | N/A | N/A | N/A | N/A | N/A |

| 60 | Llama-3.3-70B-Instruct | N/A | N/A | N/A | N/A | N/A | N/A |

| 61 | Llama-4-Maverick-17B-128E-Instruct | N/A | N/A | N/A | N/A | N/A | N/A |

| 62 | Llama-4-Scout-17B-16E-Instruct | N/A | N/A | N/A | N/A | N/A | N/A |

| 63 | MiniMax M2 | N/A | N/A | N/A | N/A | N/A | N/A |

| 64 | o3-2025-04-16 | N/A | N/A | N/A | N/A | N/A | N/A |

| 65 | o4-mini-2025-04-16 | N/A | N/A | N/A | N/A | N/A | N/A |

| 66 | Qwen2.5-72B-Instruct | N/A | N/A | N/A | N/A | N/A | N/A |

| 67 | Qwen2.5-Coder-32B-Instruct | N/A | N/A | N/A | N/A | N/A | N/A |

| 68 | Qwen3-235B-A22B | N/A | N/A | N/A | N/A | N/A | N/A |

| 69 | Qwen3-235B-A22B no-thinking | N/A | N/A | N/A | N/A | N/A | N/A |

| 70 | Qwen3-235B-A22B thinking | N/A | N/A | N/A | N/A | N/A | N/A |

| 71 | Qwen3-235B-A22B-Thinking-2507 | N/A | N/A | N/A | N/A | N/A | N/A |

| 72 | Qwen3-30B-A3B-Thinking-2507 | N/A | N/A | N/A | N/A | N/A | N/A |

| 73 | Qwen3-32B | N/A | N/A | N/A | N/A | N/A | N/A |

| 74 | Qwen3-32B no-thinking | N/A | N/A | N/A | N/A | N/A | N/A |

| 75 | Qwen3-32B thinking | N/A | N/A | N/A | N/A | N/A | N/A |
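The Resolved Rate, Resolved Rate SEM, and Pass@5 columns can be related to one another with a short sketch. The exact aggregation used for the leaderboard is not spelled out here, so the following is an assumption: each model is run several times per problem, the resolved rate is the mean of the per-run success rates, the SEM is the standard error of those per-run rates, and pass@k counts a problem as solved if any of its k runs resolved it. The function name `summarize` and the input layout are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def summarize(results: list[list[bool]]) -> dict[str, float]:
    """Aggregate run outcomes; results[p][r] is True if run r resolved problem p.

    Assumes every problem has the same number of runs.
    """
    n_runs = len(results[0])
    # Success rate of each run across all problems.
    per_run_rates = [
        mean(1.0 if problem[r] else 0.0 for problem in results)
        for r in range(n_runs)
    ]
    resolved_rate = mean(per_run_rates)
    # Standard error of the mean over runs (undefined for a single run).
    sem = stdev(per_run_rates) / sqrt(n_runs) if n_runs > 1 else 0.0
    # A problem counts for pass@k if at least one of its runs succeeded.
    pass_at_k = mean(1.0 if any(problem) else 0.0 for problem in results)
    return {"resolved_rate": resolved_rate, "sem": sem, "pass_at_k": pass_at_k}


# Toy data: 4 problems, 2 runs each (not leaderboard results).
toy = [[True, True], [True, False], [False, False], [False, False]]
print(summarize(toy))
```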

News

- [2026-01-14]:

- Added new models to the leaderboard: gpt-5.2-2025-12-11-xhigh, gpt-5.1-codex, GLM-4.7, gpt-5-mini-2025-08-07-high, gpt-oss-120b-high, Kimi K2 Thinking.

- Deprecated the following models: gpt-5-2025-08-07-medium, gpt-5-2025-08-07-high, Claude Sonnet 4, Claude Opus 4.1, o3-2025-04-16, gpt-5-codex, GLM-4.5, o4-mini-2025-04-16, gpt-5-2025-08-07-minimal, gpt-4.1-2025-04-14, Qwen3-235B-A22B-Thinking-2507, gpt-4.1-mini-2025-04-14, Qwen3-30B-A3B-Thinking-2507.

- [2025-12-22]:

- Added new model to the leaderboard: MiniMax M2.1.

- [2025-12-17]:

- Added new models to the leaderboard: gpt-5.1-codex-max, gpt-5.2-2025-12-11-medium, Devstral-2-123B-Instruct-2512, Devstral-Small-2-24B-Instruct-2512, DeepSeek-V3.2.

- Added reference evaluation for Claude Code (highlighted in orange). See setup details in Insights.

- Deprecated the following models: gemini-2.5-pro, gemini-2.5-flash, DeepSeek-V3.1.

- [2025-12-08]:

- Added new model to the leaderboard: Gemini 3 Pro Preview.

- [2025-12-05]:

- Introduced Cached Tokens column.

- [2025-11-25]:

- Added new model to the leaderboard: Claude Opus 4.5.

- [2025-11-13]:

- Added new model to the leaderboard: MiniMax M2.

- [2025-10-28]:

- Added new model to the leaderboard: GLM-4.6.

- [2025-10-09]:

- Added new models to the leaderboard: Claude Sonnet 4.5, gpt-5-codex, Claude Opus 4.1, Qwen3-30B-A3B-Thinking-2507 and Qwen3-30B-A3B-Instruct-2507.

- Added a new Insights section providing analysis and key takeaways from recent model and data releases.

- Deprecated the following models:

- Text: Llama-3.3-70B-Instruct, Llama-4-Maverick-17B-128E-Instruct, gemma-3-27b-it and Qwen2.5-72B-Instruct.

- Tools: Claude Sonnet 3.5, Kimi K2, gemini-2.0-flash, Qwen3-235B-A22B and Qwen3-32B.

- [2025-09-17]:

- Added new models to the leaderboard: Grok 4, Kimi K2 Instruct 0905, DeepSeek-V3.1 and Qwen3-Next-80B-A3B-Instruct.

- [2025-09-04]:

- Added new models to the leaderboard: GLM-4.5, GLM-4.5 Air, Grok Code Fast 1, Kimi K2, gpt-5-mini-2025-08-07-medium, gpt-oss-120b and gpt-oss-20b.

- Introduced Cost per Problem and Tokens per Problem columns.

- Added links to the pull requests within the selected time window. You can review them via the Inspect button.

- Deprecated the following models:

- Text: DeepSeek-V3, DeepSeek-V3-0324, Devstral-Small-2505, gemini-2.0-flash, gpt-4.1-2025-04-14, gpt-4.1-mini-2025-04-14, gpt-4.1-nano-2025-04-14, Llama-4-Scout-17B-16E-Instruct and Qwen2.5-Coder-32B-Instruct.

- Tools: horizon-alpha and horizon-beta.

- [2025-08-12]: Added new models to the leaderboard: gpt-5-medium-2025-08-07, gpt-5-high-2025-08-07 and gpt-5-minimal-2025-08-07.

- [2025-08-02]: Added new models to the leaderboard: Qwen3-Coder-30B-A3B-Instruct, horizon-beta.

- [2025-07-31]:

- Added new models to the leaderboard: gemini-2.5-pro, gemini-2.5-flash, o4-mini-2025-04-16, Qwen3-Coder-480B-A35B-Instruct, Qwen3-235B-A22B-Thinking-2507, Qwen3-235B-A22B-Instruct-2507, DeepSeek-R1-0528 and horizon-alpha.

- Deprecated models: gemini-2.5-flash-preview-05-20 no-thinking.

- Updated demo format: tool calls are now shown as distinct assistant and tool messages.

- [2025-07-11]: Released Docker images for all leaderboard problems and published a dedicated HuggingFace dataset containing only the problems used in the leaderboard.

- [2025-07-10]: Added a model performance chart and evaluations on June data.

- [2025-06-12]: Added tool usage support, evaluations on May data and new models: Claude Sonnet 3.5/4 and o3.

- [2025-05-22]: Added Devstral-Small-2505 to the leaderboard.

- [2025-05-21]: Added new models to the leaderboard: gpt-4.1-mini-2025-04-14, gpt-4.1-nano-2025-04-14, gemini-2.0-flash and gemini-2.5-flash-preview-05-20.